This article discusses how raw motion data that has been obtained through motion capture can be processed to extract higher-level movement attributes.

Raw Motion Capture Data

The data obtained through motion capture typically represents a temporal sequence of positions and rotations of body joints. Joint positions are represented as three-dimensional vectors. Joint orientations are represented as quaternions, which are four-dimensional quantities consisting of one real component and three imaginary components. This type of data lends itself to reconstructing so-called skeleton representations of poses.
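A minimal Python sketch of how a single frame of such data might be held in memory. It assumes quaternions stored in (w, x, y, z) order, with w the real part; real capture systems differ in component order, units, and joint naming, and the joint names and the `JointSample` type here are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
# Quaternion stored as (w, x, y, z): w is the real part, x, y, z the imaginary parts.
Quat = Tuple[float, float, float, float]


@dataclass
class JointSample:
    position: Vec3     # 3D joint position (units depend on the capture system)
    orientation: Quat  # unit quaternion describing the joint's rotation


# One frame maps joint names to samples; a recording is a list of frames.
Frame = Dict[str, JointSample]


def quat_from_axis_angle(axis: Vec3, angle: float) -> Quat:
    """Unit quaternion for a rotation of `angle` radians about `axis`."""
    ax, ay, az = axis
    n = math.sqrt(ax * ax + ay * ay + az * az)
    s = math.sin(angle / 2.0) / n  # dividing by n also normalises the axis
    return (math.cos(angle / 2.0), ax * s, ay * s, az * s)


# Hypothetical joint names; real systems use their own naming.
frame: Frame = {
    "pelvis": JointSample((0.0, 0.9, 0.0), (1.0, 0.0, 0.0, 0.0)),
    "left_knee": JointSample((0.1, 0.5, 0.0), quat_from_axis_angle((1.0, 0.0, 0.0), math.pi / 4)),
}
```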

Physical Quantities

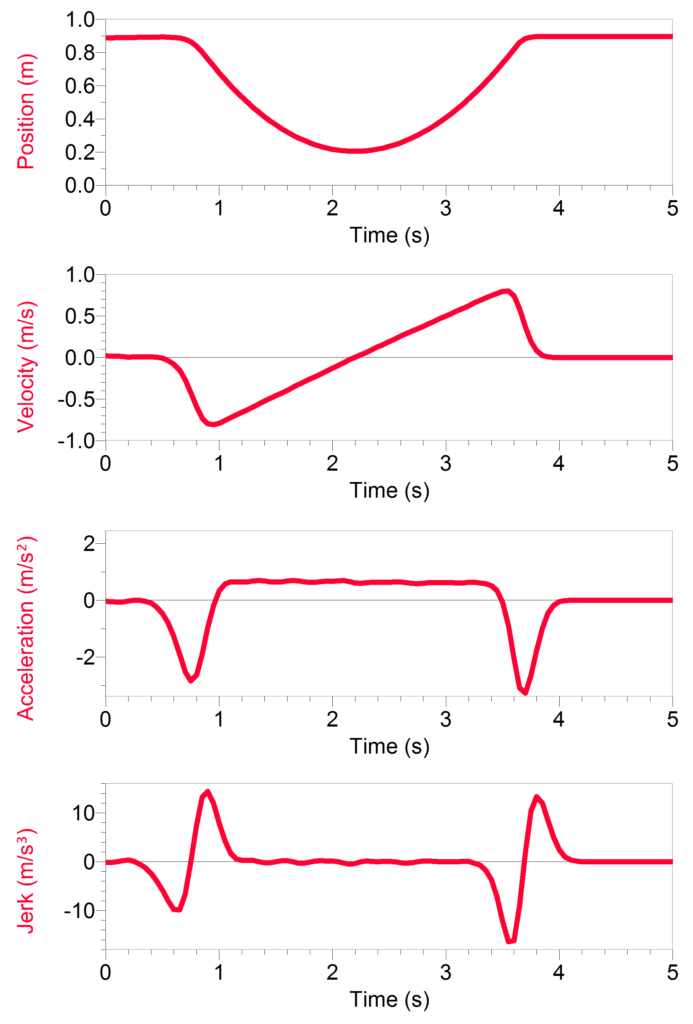

Information about body motion can only be indirectly obtained by calculating how joint positions and orientations change from frame to frame. Some of the more intuitively understandable physical quantities that describe aspects of motion are velocity, acceleration, and jerk. Velocity describes how positions change over time, acceleration describes how velocity changes over time, and jerk describes how acceleration changes over time.

There are two types of motion data that are of interest in motion capture. One deals with how joint positions change over time and the other with how joint rotations change over time. In the first case, we are dealing with linear derivatives such as linear velocity, linear acceleration, and linear jerk. In the second case, we are dealing with angular derivatives, i.e. angular velocity, angular acceleration, and angular jerk.

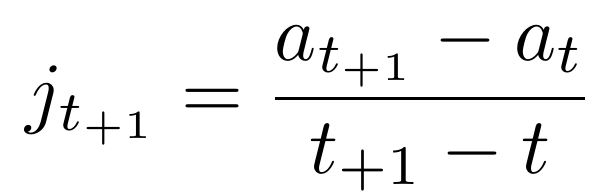

Linear derivatives can easily be obtained by calculating the discrete derivative of the joint positions with respect to time. The equations for this are as follows:

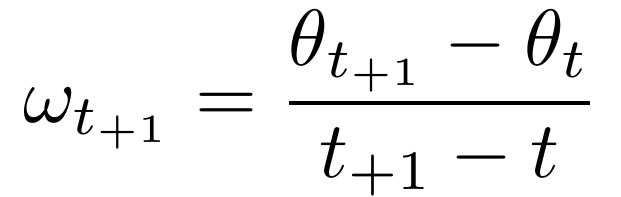
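A minimal Python sketch of the linear derivatives, assuming a constant frame interval `dt` and simple backward differences: v_t = (x_t - x_{t-1}) / dt, and likewise for acceleration (from velocity) and jerk (from acceleration). The discretisation in the equations above may differ; the function names are illustrative.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def finite_difference(series: Sequence[Vec3], dt: float) -> List[Vec3]:
    """Backward difference (s[t] - s[t-1]) / dt; the result is one sample shorter."""
    return [
        tuple((cur - prev) / dt for prev, cur in zip(p, c))
        for p, c in zip(series, series[1:])
    ]


def linear_derivatives(positions: Sequence[Vec3], dt: float):
    """Linear velocity, acceleration and jerk of one joint from sampled positions."""
    velocity = finite_difference(positions, dt)
    acceleration = finite_difference(velocity, dt)
    jerk = finite_difference(acceleration, dt)
    return velocity, acceleration, jerk


# A joint moving along x as x = t^2, sampled once per second.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (4.0, 0.0, 0.0), (9.0, 0.0, 0.0)]
v, a, j = linear_derivatives(positions, 1.0)
# v -> [(1.0, 0.0, 0.0), (3.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
# a -> [(2.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
# j -> [(0.0, 0.0, 0.0)]
```

Each differentiation step shortens the series by one sample and amplifies measurement noise, so positions are often smoothed before differentiating.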

Angular derivatives can be obtained in a similar manner by calculating the discrete derivative of the joint orientations with respect to time. The equations for this are as follows:
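One common way to obtain angular velocity from two consecutive unit quaternions, sketched here in Python as an assumption rather than as the article's own formulation, is to compute the relative rotation dq = q_t * conj(q_{t-1}), convert it to an axis and an angle, and divide the angle by the frame interval. Angular acceleration and jerk then follow from backward differences of the angular velocity, as in the linear case. The sketch assumes (w, x, y, z) component order and expresses the result in the world frame; using conj(q_{t-1}) * q_t instead would express it in the joint's local frame.

```python
import math
from typing import List, Sequence, Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length assumed
Vec3 = Tuple[float, float, float]


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_conj(q: Quat) -> Quat:
    w, x, y, z = q
    return (w, -x, -y, -z)


def angular_velocity(q_prev: Quat, q_cur: Quat, dt: float) -> Vec3:
    """Angular velocity (rad/s) of the rotation taking q_prev to q_cur in dt seconds."""
    w, x, y, z = quat_mul(q_cur, quat_conj(q_prev))  # relative rotation (world frame)
    if w < 0.0:  # q and -q encode the same rotation; pick the shorter arc
        w, x, y, z = -w, -x, -y, -z
    s = math.sqrt(x * x + y * y + z * z)  # equals sin(angle / 2)
    if s < 1e-12:
        return (0.0, 0.0, 0.0)  # no measurable rotation between frames
    angle = 2.0 * math.atan2(s, w)
    k = angle / (s * dt)  # unit axis (x, y, z) / s, scaled by angle / dt
    return (x * k, y * k, z * k)


def _diff(series: Sequence[Vec3], dt: float) -> List[Vec3]:
    """Backward difference of a vector series; one sample shorter than the input."""
    return [tuple((c - p) / dt for p, c in zip(a, b)) for a, b in zip(series, series[1:])]


def angular_derivatives(orientations: Sequence[Quat], dt: float):
    """Angular velocity, acceleration and jerk of one joint from sampled orientations."""
    omega = [angular_velocity(p, c, dt) for p, c in zip(orientations, orientations[1:])]
    alpha = _diff(omega, dt)
    jerk = _diff(alpha, dt)
    return omega, alpha, jerk
```

For example, a joint turning 0.1 rad about the z axis every 0.01 s yields an angular velocity of approximately (0, 0, 10) rad/s and near-zero angular acceleration and jerk.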

Laban Effort Factors

While physical quantities of motion are easy to compute from raw motion capture data, they are not necessarily meaningful from a dance perspective. Dancers and choreographers often think about body movement in terms of more qualitative aspects such as its expressive, aesthetic, or communicative properties. An article on movement representation describes some of the movement aspects that the dancers we worked with refer to. There is also an article available that describes more general movement qualities in dance.

For the purpose of movement analysis, the formalisations introduced by the Laban Movement Analysis (LMA) system have informed fields such as robotics and human-computer interaction. Central to LMA are four categories that formalise different aspects of human movement: Body, Space, Shape, and Effort.

The Effort category describes aspects that relate to the dynamics, energy, and inner intention of movement, all of which contribute to its expressivity. This category is subdivided into four Effort Factors: Space, Weight, Time, and Flow. Each Factor spans two opposing poles.

- The Space factor describes the directedness of movement, which can be either Direct or Indirect.

- The Weight factor describes the strength of movement, which can be either Light or Strong.

- The Time factor describes the urgency of movement, which can be either Sustained or Sudden.

- The Flow factor describes the continuity of movement, which can be either Free or Bound.

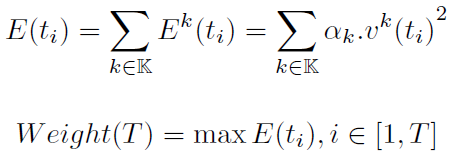

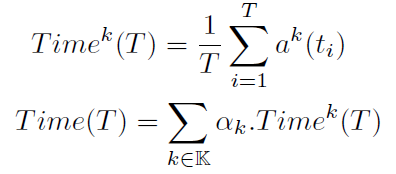

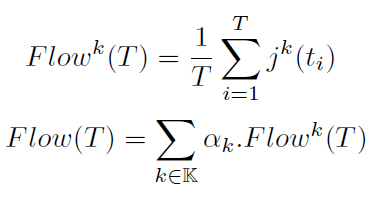

Several researchers have proposed algorithms for deriving numerical representations of LMA categories from physical descriptors of motion. A particularly exhaustive review of algorithms for computing expressive movement descriptors was published at the Movement and Computing conference in 2015 by Larboulette and Gibet. This review includes algorithms that have been proposed for calculating the four Laban Effort Factors. The equations provided here are taken directly from this publication. The symbols employed in these equations are as follows: x refers to position, v to linear velocity, a to linear acceleration, j to linear jerk, t to time, T to time window length, k to joint index, and α to joint mass.
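To illustrate how such descriptors can be computed over one time window, the Python sketch below follows our reading of the formulations commonly cited from that review: Weight as the peak over the window of a kinetic-energy-like term, the sum over k of α_k times the squared speed of joint k; Time as the mass-weighted mean acceleration magnitude; Flow as the mass-weighted mean jerk magnitude; and Space as the ratio of travelled path length to net displacement. It is a hedged sketch, not a transcription of the published equations, which may differ in normalisation and windowing. Joint names and masses are hypothetical inputs.

```python
import math
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Series = Dict[str, Sequence[Vec3]]  # joint name -> samples inside one time window


def norm(v: Vec3) -> float:
    return math.sqrt(sum(c * c for c in v))


def weight_effort(velocities: Series, masses: Dict[str, float]) -> float:
    """Peak, over the window, of the mass-weighted sum of squared joint speeds."""
    n = min(len(v) for v in velocities.values())
    return max(
        sum(masses[k] * norm(v[t]) ** 2 for k, v in velocities.items()) for t in range(n)
    )


def _weighted_mean_magnitude(series: Series, masses: Dict[str, float]) -> float:
    """Sum over joints of mass * mean vector magnitude over the window."""
    return sum(masses[k] * sum(norm(x) for x in s) / len(s) for k, s in series.items())


def time_effort(accelerations: Series, masses: Dict[str, float]) -> float:
    return _weighted_mean_magnitude(accelerations, masses)  # mean acceleration magnitude


def flow_effort(jerks: Series, masses: Dict[str, float]) -> float:
    return _weighted_mean_magnitude(jerks, masses)  # mean jerk magnitude


def space_effort(positions: Series, masses: Dict[str, float]) -> float:
    """Mass-weighted ratio of travelled path length to net displacement per joint."""
    total = 0.0
    for k, xs in positions.items():
        path = sum(norm(tuple(b - a for a, b in zip(p, q))) for p, q in zip(xs, xs[1:]))
        net = norm(tuple(b - a for a, b in zip(xs[0], xs[-1])))
        total += masses[k] * path / net  # undefined if the joint returns to its start
    return total
```

The inputs are the per-joint linear derivatives described in the Physical Quantities section. If the masses sum to one, a Space value of 1 means every joint moved along a straight line within the window, and larger values indicate more winding paths.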

One important point to note is that the equations consist of two parts. One of these parts (usually the second) sums the contributions of all the joints to an Effort Factor by scaling them with the joints’ masses. Obtaining reasonable estimates for these masses is not easy, especially when dealing with motion capture recordings that contain many joints. For the purpose of biomechanical modelling, several tables have been published that contain empirically obtained values for key body parts. A good summary of such tables is provided by Ramachandran et al. If accuracy is not a major concern, the masses of motion capture joints can be estimated from such tables.
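When only a segment-level table is available, one simple (and admittedly crude) way to guess per-joint masses is to split each segment's mass fraction evenly among the capture joints assigned to it. The Python sketch below does exactly that; the function name, the segment and joint names, and the numbers are all hypothetical placeholders, not values from Ramachandran et al. or any other published table.

```python
from collections import Counter
from typing import Dict


def joint_masses(segment_fractions: Dict[str, float],
                 joint_to_segment: Dict[str, str]) -> Dict[str, float]:
    """Split each body segment's mass fraction evenly among the joints mapped to it."""
    joints_per_segment = Counter(joint_to_segment.values())
    return {
        joint: segment_fractions[segment] / joints_per_segment[segment]
        for joint, segment in joint_to_segment.items()
    }


# PLACEHOLDER numbers for illustration only, not values from any published table.
segment_fractions = {"trunk": 0.5, "forearm_and_hand": 0.02}
joint_to_segment = {
    "spine_lower": "trunk",
    "spine_upper": "trunk",
    "left_wrist": "forearm_and_hand",
}
masses = joint_masses(segment_fractions, joint_to_segment)
# masses -> {"spine_lower": 0.25, "spine_upper": 0.25, "left_wrist": 0.02}
```

Segments with no mapped joint simply drop out, so the resulting masses may need renormalising if Effort values are to be compared across skeletons with different joint sets.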

As part of the E2-Create project, a small movement analysis library has been developed as an addon for the openFrameworks creative coding environment. This library provides functionality to calculate both physical motion quantities and Laban Effort Factors in real time from a motion capture data stream. It includes configuration files containing estimates of joint masses for the Qualisys and Captury motion capture systems. This library is available here.
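The following Python sketch is not the addon's interface; it only illustrates one hypothetical way of computing derivatives in real time: keep a short ring buffer of recent positions per joint and recompute the newest velocity, acceleration, and jerk whenever a frame arrives. The class name `JointStream`, the constant frame interval, and the four-sample buffer are assumptions.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


class JointStream:
    """Hypothetical per-joint buffer that updates derivatives as frames arrive."""

    def __init__(self, dt: float) -> None:
        self.dt = dt
        # Four positions are the minimum needed for one jerk value.
        self.positions: Deque[Vec3] = deque(maxlen=4)

    def push(self, position: Vec3) -> None:
        self.positions.append(position)  # the oldest sample drops out automatically

    def _diff(self, series: List[Vec3]) -> List[Vec3]:
        """Backward differences of consecutive samples, divided by dt."""
        return [tuple((c - p) / self.dt for p, c in zip(a, b)) for a, b in zip(series, series[1:])]

    def latest(self) -> Tuple[Optional[Vec3], Optional[Vec3], Optional[Vec3]]:
        """Newest (velocity, acceleration, jerk); None until enough frames have arrived."""
        vel = self._diff(list(self.positions))
        acc = self._diff(vel)
        jerk = self._diff(acc)
        return (vel[-1] if vel else None, acc[-1] if acc else None, jerk[-1] if jerk else None)
```

A fixed-length buffer keeps the per-frame cost constant regardless of recording length, which is what matters when the values must keep pace with the capture frame rate.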